I thought I would write this post because every time I looked for an example of categorising text all I would find were sentiment analysis of news articles or Twitter feeds. That’s cool because you can get started without having your own data set, but I do have a real data set I need to work with!

For the past 9.5ish years I have been running Deal Shrimp, which aggregates and displays deals from around the web. I wanted some way to automatically put each Deal (product) into a category. Enter machine learning! Luckily I have a list of over 3,000 Deals to use as a training set, so that should be a good start.

I will be analysing the data by building a Keras/TensorFlow based neural network. I will go through the process of tokenising the title data (with stemming) then transforming it into a vector to be passed into the neural network. 80% of the data will be used for training with 20% for testing.

Follow along on Github: ml-product-categoriser/main.py

The Data

The data is stored in a CSV file, although it could just have easily been streamed from the database. The CSV file contains these columns:

deal_id title standard_price discount_price has_regions category_id site_name category_name

For the sake of an example/tutorial I will build a model that can be applied to different types of products. Therefore, I am using only the title of each Deal as a network input. The output will be mapped to the category_name.

A more accurate categoriser could be built by taking into account some of the other fields. For example, if a Deal has_regions, it is more likely to be a voucher for eating out or an experience than a physical product. Some sites will only sell certain types of products, so considering the site_name as well could help. There’s possibly also some information that could be useful by bucketing and categorising on the Deal price.

Cleaning the Title

The first step is cleaning, normalising and tokenising the title. This essentially means removing irrelevant/common words, and taking the remaining words back to their root (stemming). The stemmer is a Porter Stemmer, and it works by removing plurals and suffixes. For example, car and cars both become car; body and bodies become bodi. Sometimes the stemmer can be over-zealous so evaluating different ones to suit your needs can be a good idea.

The implementation of this tokenising is in the tokenize_title function.

Some examples of how input titles are transformed to tokens are below:

A Professional Oven Clean incl. Product or from $59 for a Home Window Clean

(value up to $220) ->

{'oven', 'clean', 'profession', 'window'}

Day Pass (value up to $66) ->

{'pass', 'day'}

A $40 Food & Beverage Voucher ->

{'beverag', 'food'}

A Two-Hour Full Body Deluxe Pamper Package (value up to $200) ->

{'packag', 'hour', 'pamper', 'delux', 'bodi'}

A Two-Hour Massage Pamper Package incl. Full-Body Hot Stone Massage,

Shellac™ Manicure & Foot Ritual (value up to $308) ->

{'hot', 'packag', 'massag', 'hour', 'shellac', 'stone', 'ritual', 'foot',

'manicur', 'pamper', 'bodi'}

18pc Educational Electronic Blocks Kit for Kids ->

{'educ', 'kid', 'electron', 'block'}"

A Tongariro Stand Up Paddleboard Adventure (value up to $50) ->

{'paddleboard', 'adventur', 'tongariro', 'stand'}

`

For my purposes I decided the order of words were not important so they are returned as a set.

Input Vectorisation

A neural network expects a vector of numbers as an input. In this case, I will use a binary input vector for each title, where 1 indicates that the word exists in the title and 0 does not. The “lookup” array will be a vector containing all the tokens of all the titles (with length N).

To illustrate, here’s an example (for readability I am using non-stemmed tokens). Let’s say there are three Deals:

{'hot', 'stone', 'massage'}

{'pamper', 'massage', 'package'}

{'manicure', 'pamper', 'day'}

We iterate over each Deal and append each of its tokens to an array, if that token is not already in the array. The resulting array for these three is:

['hot', 'stone', 'massage', 'pamper', 'package', 'manicure', 'day']

This array has length N. Each Deal is then transformed into an array (also of length N) with 0s or 1s in the positions corresponding to the tokens it has. In this case, referring back to the three examples above:

[1, 1, 1, 0, 0, 0, 0] [0, 0, 1, 1, 0, 0, 0] [0, 0, 0, 1, 0, 1, 1]

In our case the lookup array is 4,209 tokens long, so the neural network has 4,209 inputs. The training input is an array of 2,600ish of these 4,209 length binary vectors. The title_vectors array is split into training_title_vectors (the first 80%) and test_title_vectors (the remaining 20%).

Ouput Vectorisation

The outputs do not need to be vectorised, they are fed in as integers that represent their index in a category lookup array. The output from the neural network is a vector of probabilities though.

For example, the category lookup array might be:

['car', 'clothing', 'house and garden']

The output vector of [0.4. 0.1, 0.5] would map to home and garden; [0.8, 0.1, 0.1] would be car.

Use numpy.argmax to get the index of the highest prediction and map it back to the category lookup array.

Building the Neural Network

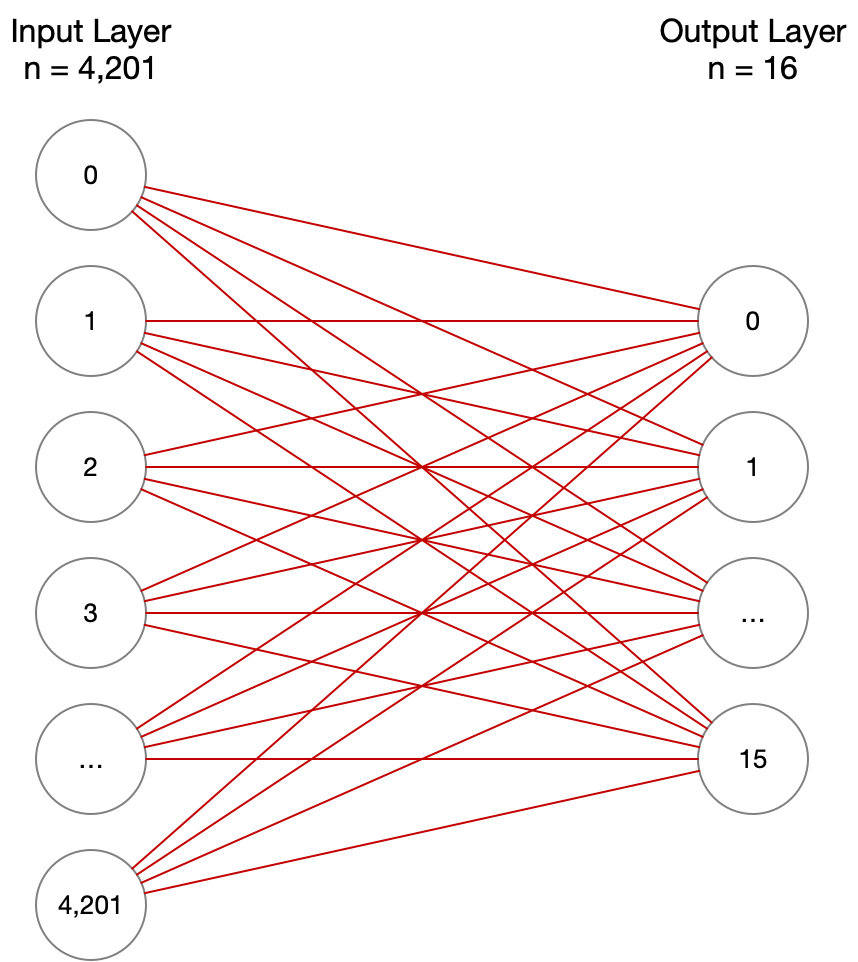

Now that we know how many input neurons (4,209) and output neurons (16, the number of categories) there are, we can draw a picture of the neural network. Note: this step is not necessary. You can train your neural network without having a picture of it.

I experimented with the addition of a hidden layer of different sizes and it didn’t drastically change the output so the neural network is simple and doesn’t use one.

Using Keras allows us to easily define the network as sequential models. Refer to the build_model function to find these next three code snippets.

model = keras.Sequential([

keras.layers.Dense(len(words), tf.nn.relu),

keras.layers.Dense(len(categories_names), tf.nn.softmax)

])

This is a Neural Network with two layers, one input layer with len(words) (4,201) inputs, and an output later with len(categories_names) (16) outputs.

Once the model is defined it can then be compiled.

model.compile(optimizer='nadam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

I found that changing the optimizer either didn’t affect the final accuracy too much (as you might expect, adam and nadam are fairly similar) or completely decimated it (by choosing sgd, for example). You can choose an optimizer that gives you the best results. The loss function should be kept as sparse_categorical_crossentropy since we have multi-categorisation without one-hot encoding input.

Training and Testing

To train the model, just run:

model.fit(training_titles, training_categories, epochs=5, batch_size=32)

The epochs can be adjusted based on how quickly your neural network converges on an accurate result. In my testing, I get 98% after just 2 epochs and then up to 99% after 5, so I could potentially only use 2 epochs to speed up training time. However on my machine (2018 MacBook Pro with 2.3Ghz i5) it only takes about 30 seconds to train the model with 5 epochs. Depending on how much data you have, and your acceptable accuracy, you can choose how best to train your model.

While training you should get nice output showing progress.

Epoch 1/5 2896/2896 [==============================] - 16s 6ms/sample - loss: 1.2419 - acc: 0.6578 Epoch 2/5 2896/2896 [==============================] - 16s 6ms/sample - loss: 0.2001 - acc: 0.9427 Epoch 3/5 2896/2896 [==============================] - 16s 6ms/sample - loss: 0.0693 - acc: 0.9814 Epoch 4/5 2896/2896 [==============================] - 17s 6ms/sample - loss: 0.0463 - acc: 0.9862 Epoch 5/5 2896/2896 [==============================] - 16s 5ms/sample - loss: 0.0344 - acc: 0.9900

Then I used the 20% of data that I didn’t train with to evaluate the model.

724/724 [==============================] - 0s 377us/sample - loss: 1.2337 - acc: 0.7307

So the accuracy is 73%.

Having a look at some of the Deals the model predicts incorrectly, it’s not always that big of a deal (hah) and I can see why it might get things wrong. Sometimes even when going through and categorising the Deals manually to set up the training data it was tough to choose between two categories, so I kind of expect the neural network to make similar errors.

High Tea Party Experience at the Hilton on 12 or 13 November 2016 - General Ticket from $59, Options Available for 1, 2, 4 or 8 People (Value Up To $1540). Includes Bubbly and Cocktails, Gifts, Fashion Show, High Tea Sitting, Pampering and More

Predicted: Experiences, Actual: Eating & Drinking

From $99 for a Car Stereo Installed, Options for JVC & American Hi Fi Stereos Available from [store]

Predicted: Electronics & Computers, Actual: Automotive

Some it can’t decide if the Deal is for an experience or a meal:

One Jumbo Bucket of Golf Balls, One Gourmet Burger & Fries OR $25 for One Jumbo Bucket of Golf Balls, One Gourmet Burger, Fries & One Premium Beer OR Wine (value up to $42)

Predicted: Eating & Drinking, Actual: Sports & Fitness

And some it just got plain wrong:

Deep Sea, Cook Strait & Wellington Harbour Fishing Trips with Experts [redacted] Fishing (value up to $3800)

Predicted: Cooking, Actual: Fishing & Diving

I can guess this is because it sees the Cook in Cook Strait, and also because the person’s name I have redacted is also a type of meat.

Improvements

On reviewing these I do have some ideas for improving the accuracy.

Stemming

When I see fish and fishing, it is probable that the former would refer to an Eating & Drinking Deal, whereas the latter refers to a Fishing & Diving Deal. However when both these words are stemmed, they become fish, so both would be the same input to the neural network. A better stemmer would probably be able to tell the difference between these somehow. Or maybe just code it to exlude words ending in “ing” from being stemmed.

Tagging / Multi Categories

In instances where a Deal could belong to multiple categories or it is not clear which category is best, it could be assigned multiple categories or tags. Then, the output would be vectorised too, so that multiple categories were output. Whether or not this would give better results than chucking a Deal into a good-but-not-perfect category I am not sure.

If your data is more distinct into which categories it fits, you might have better results.

Caveats

The main issue to be aware of is that if a Deal comes in with a new word your neural network will need to be rebuilt and retrained. This is because you will now require N+1 inputs. Take our former massage and manicure example. Let’s add a “cold stone massage” Deal. Your input vector lookup now looks like this:

['hot', 'stone', 'massage', 'pamper', 'package', 'manicure', 'day', 'cold']

And your four input Deal vectors like this:

[1, 1, 1, 0, 0, 0, 0, 0] [0, 0, 1, 1, 0, 0, 0, 0] [0, 0, 0, 1, 0, 1, 1, 0] [0, 1, 1, 0, 0, 0, 0, 1] # cold stone massage

So you need to rebuild and retrain with your new vectors and data. If this takes only 30 seconds it’s not that big of a deal, and it’s probably good to get a fresher and more accurate picture of your data anyway.

Also if you have a big enough dataset to begin with you might find that the likeliness of getting new words decreases anyway. Stemming words back to their roots means there are less unique variations as well.

Conclusion

Keras and Tensorflow make building neural networks super easy. As is common with machine learning, getting good, clean data to train with is the more common problem. You should be able to follow my example with your own data and get similar results. You can find the code on Github. Unfortunately, as I said, the hard part of machine learning is getting training data, and for privacy reasons I can’t release mine. Sorry! But, this should be a good jumping off point for working with your own data.

Please do get in contact if you have your own data and want assistance in analysing it, or even an insight into what data you should be capturing in the first place.